AI Doomerism Is a Business Tactic

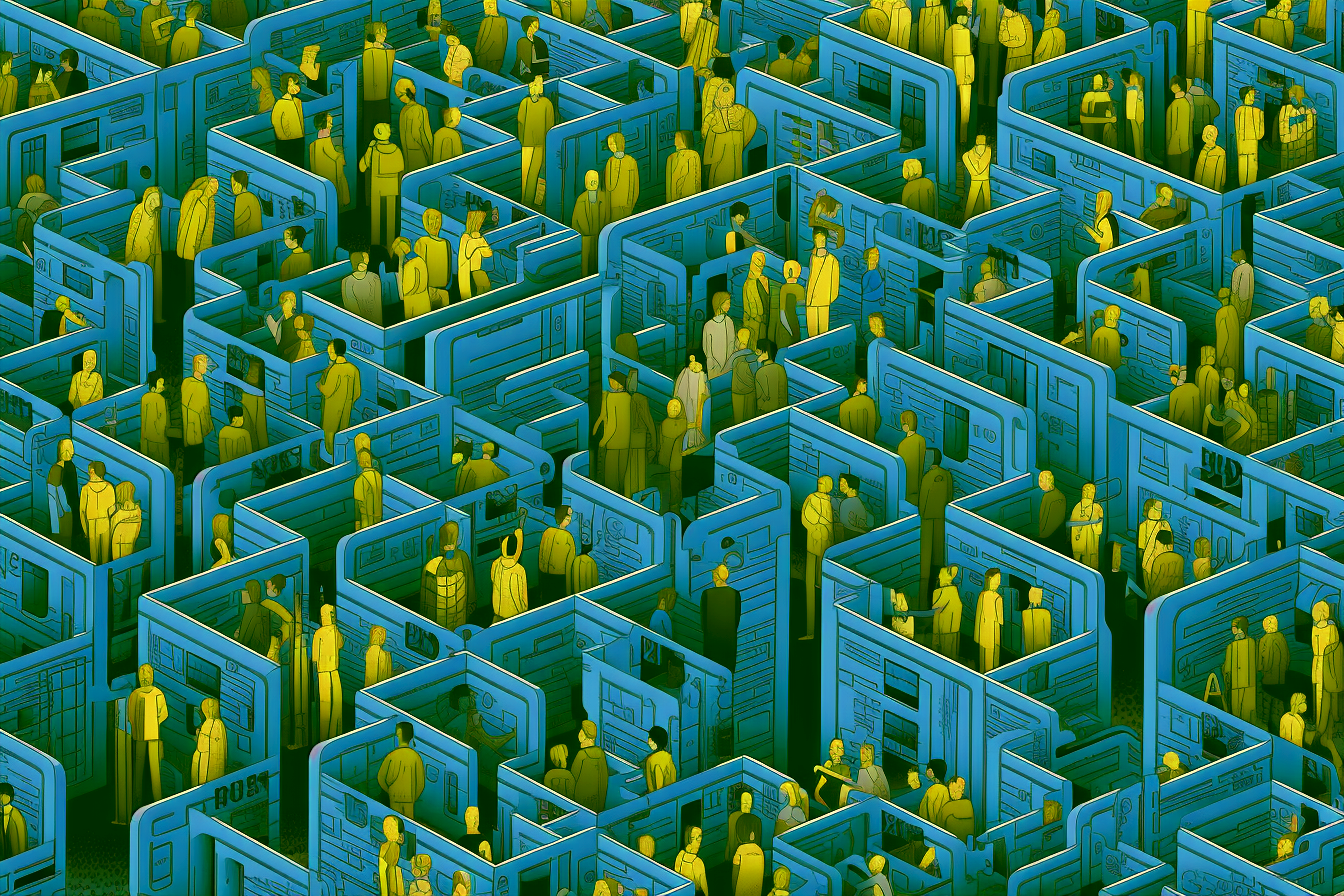

Recent warnings from tech leaders that AI could lead to the extinction of humanity have raised eyebrows and reminded me of rhetoric that supports the US military-industrial complex. The apocalyptic scenarios put forth by AI industry titans like Sam Altman may be giving birth to an “AI-industrial complex,” driven by hyperbole rather than a rational evaluation of AI’s potential destructive power.

A Familiar Narrative

The parallels between the AI extinction talk and the fear-mongering that powers the military-industrial complex are striking. The military-industrial complex refers to the close relationship between the defense industry, the military, and the government. It suggests that these entities have a vested interest in perpetuating a state of war or the fear of external threats in order to maintain their power and profitability. By exaggerating or fabricating dangers, those in the military or defense industry can justify increased military spending, weapon development, and the expansion of military influence.

Similarly, fear-mongering about the risks of AI may amplify or embellish the potential danger of the technology. While it is important to acknowledge and address the ethical and safety concerns surrounding AI, over-the-top speculation can lead to exaggerated narratives that overshadow its potential benefits and hinder progress in the field. It can also shape public opinion and policy decisions, potentially resulting in restrictive regulations or unnecessary limitations on AI development.

The Emergence of an “AI-Industrial Complex”

The concept of an AI-industrial complex refers to the amalgamation of influential entities, including corporations, government agencies, and media outlets, that profit from fear and exaggeration surrounding AI’s potential dangers. This complex capitalizes on the public’s fascination with doomsday scenarios and, ironically, fuels the demand for AI-related products, services, and research by depicting these technologies as all-powerful.

The calls for regulation in the face of this supposedly extinction-level threat are hollow. Regulation often favors incumbents, who can use their money and power to shape the rules in their favor, so it makes sense that many giants in the AI space are now calling for legislators to take a closer look.

It’s imperative to question the motivations behind such rhetoric and consider whether it serves the best interests of society or simply acts as a vehicle for self-interest.

Fostering a Balanced Dialogue

The dangers of AI should not be disregarded, as responsible discussions around ethics, privacy, job displacement, and algorithmic bias are crucial. However, it is equally important to maintain a balanced dialogue that separates legitimate concerns from alarmist speculation. Painting all AI advancements with a broad brush of impending doom stifles innovation and instills unnecessary fear in the public.

To avoid falling into the trap of an AI-industrial complex, we must encourage critical thinking, evidence-based analysis, and multidisciplinary collaborations. Thought leaders, policymakers, and the media should prioritize objective assessments of AI’s risks and benefits.

Drawing parallels between the AI extinction talk and the military-industrial complex should serve as a reminder to exercise caution and skepticism in the face of hyperbolic scenarios. In both cases, there is a potential for vested interests to exploit and manipulate public fear for their own gain. The military-industrial complex thrives on the perpetuation of fear to maintain its influence, while fear-mongering about AI risks can serve the interests of individuals or organizations seeking to control or shape the development of AI technologies and their regulation.

Recognizing this parallel makes it easier to see how fear-based narratives shape public opinion and policy decisions in both the defense and AI domains. It also underscores the importance of critical thinking, transparency, and ethical considerations in navigating these complex issues.

Do you agree with this?

Do you disagree or have a completely different perspective?

We’d love to know.