AI transformation rarely happens in a single leap. Instead, it evolves through a series of incremental, often messy, small-scale shifts.

Toby Daniels

03•04•25

FEATURE

The Sledgehammer of Change

There can be a lot of light in and at the end of a transformation tunnel.

The Meaning of Photoshop

The Digital, Invisible, and Ruthless Device of Destruction

Chris Perry

03•03•25

ON_DISCOURSE SUMMIT

Round One of Summit Speakers

No one comes to an ON_Discourse event to hear our team speak. They want to know what the real experts are doing.

Dan Gardner

Why small, tactical shifts lead to big impact

Katherine von Jan

The rise of Agentic Managers

Don McGuire

How a global marketing team is integrating AI

Mark Howard

The AI guardrails every business needs

NEWSLETTER

Agents are more like staff than software.

One of our members started to market her AI agent as a person to hire rather than a piece of software to buy. This is either a meaningless semantic distinction or a new business model. We dig into it here.

As AI makes perfect self-presentation available to everyone, the value of that perfection plummets.

Henrik Werdelin

02•28•25

NEWSLETTER

AI is bigger than efficiency.

For over a decade, the unicorn was the point of entrepreneurism. That made sense in an era of platforms. The emergence of AI, specifically agents and generative models, has created an opportunity that might change not only the game but also the mascot. Enter the donkeycorn, the antidote to hyperbolic entrepreneurship.

PODCAST

Episode #008

Dan and Chmiel invite Henrik Werdelin, co-founder of BarkBox and Prehype, to talk about why unicorns are old news and donkeycorns are the future. You’ll hear how agentic AI can prompt wannabe entrepreneurs to become actual founders. This is a small idea that could transform the economy.

NEWSLETTER

Solve Small

AI is too big to “do.” It needs to be broken down into small functions, frameworks, and features that can solve real business problems. In other words: solve small.

PODCAST

Episode #007

Toby, Dan, and Chmiel officially announce The ON_Discourse Summit - Solve Small: AI Transformation for the C-Suite.

NEWSLETTER

The discourse about AI transformation is too big, vague, and theoretical.

We’re working on a new project built on this idea of small thinking and AI transformation. Over the next six weeks, we will be provoking and discoursing about the real, actionable things industry leaders are actually doing to realize the epic future we all keep describing.

PODCAST

Episode #006

Dan returns to the podcast to talk about the off-the-record conversations he had at Davos. Toby and Chmiel use the LinkedIn post he wrote about his trip to scrutinize the level of discourse about AI transformation. The key takeaway: no one knows what happens next.

NEWSLETTER

Why are you acting like this will ever make sense?

You are not living in conventional times. You are living on the fault line of a new technological epoch, where the tectonic plates of platforms, workforces, data, and content are all fracturing under your feet. Get used to earthquakes.

PODCAST

Episode #005

Toby and Chmiel reflect on the future of managers. They ponder the value of the role and whether AI can enhance or replace it. Perspectives from ON_Discourse member Katherine von Jan, founder of tough.day, reveal a new set of opportunities to help employees and organizations thrive in the AI era.

NEWSLETTER

Managers are not just obsolete; they are harming your organization.

Managers have always been the low-hanging fruit of office culture. Easy to pick on, complain about, and parody. Now, thanks to AI, they are about to get permanently plucked.

PODCAST

Episode #004

Toby, Dan, and Chmiel argue about Boardy, an AI bot that crawls LinkedIn, calls you on the phone, asks you personal and probing questions, listens to and interprets your answers, and then makes contextual introductions to similar strangers from different networks. Is this what we are talking about when we talk about the future of AI?

CES 2025

What Happens in Vegas... Gets Recapped

We hosted floor tours about the Agentic Era. We recorded the discourse with over 120 executives. We asked Elon Musk why the internet sucks. If you want to know what happened at CES, we’ve got you covered.

Download the Report

We partnered with Stagwell this year to summarize the discourse from our tours.


Chmiel says goodbye to Vegas and to gadgets, and hello to the agentic web.

NEWSLETTER

AI is starting to get useful

This week’s dispatch covers the trends we noticed in our research, the exhibitions we are focusing on, and the perspectives we are hearing from the industry leaders we are guiding through the CES floor.

CES 2025

January 8, 10:30pm ET, 7:30pm PT • @Live

We're excited to announce that ON_Discourse is participating in a live Q&A with Elon Musk and Stagwell Chairman and CEO Mark Penn during CES 2025.

During the conversation, our co-founders Toby Daniels and Dan Gardner will have an opportunity to ask Elon about the state of the internet and how he thinks we can fix it.

CES 2025

Floor Tours

Agents are the quiet revolution reshaping our digital lives. Join us and Stagwell at CES on the Agentic Era Tour and explore how AI agents are not just tools but transformative companions in healthcare, work, and the home.

Toby Daniels

12•20•24

SPECIAL ISSUE

What can agents do for your business?

How can you increase revenue 5X without building a new tech stack? We report back from an AI expert on the simple path to growth.

NEWSLETTER

Future-proofing the web is making it worse

We took optimization too far. We turned the magic of the early internet into an endless spreadsheet. An analysis of how the concept of future-proofing web design has slowly degraded the wonder and experience of the internet. Can it be fixed?

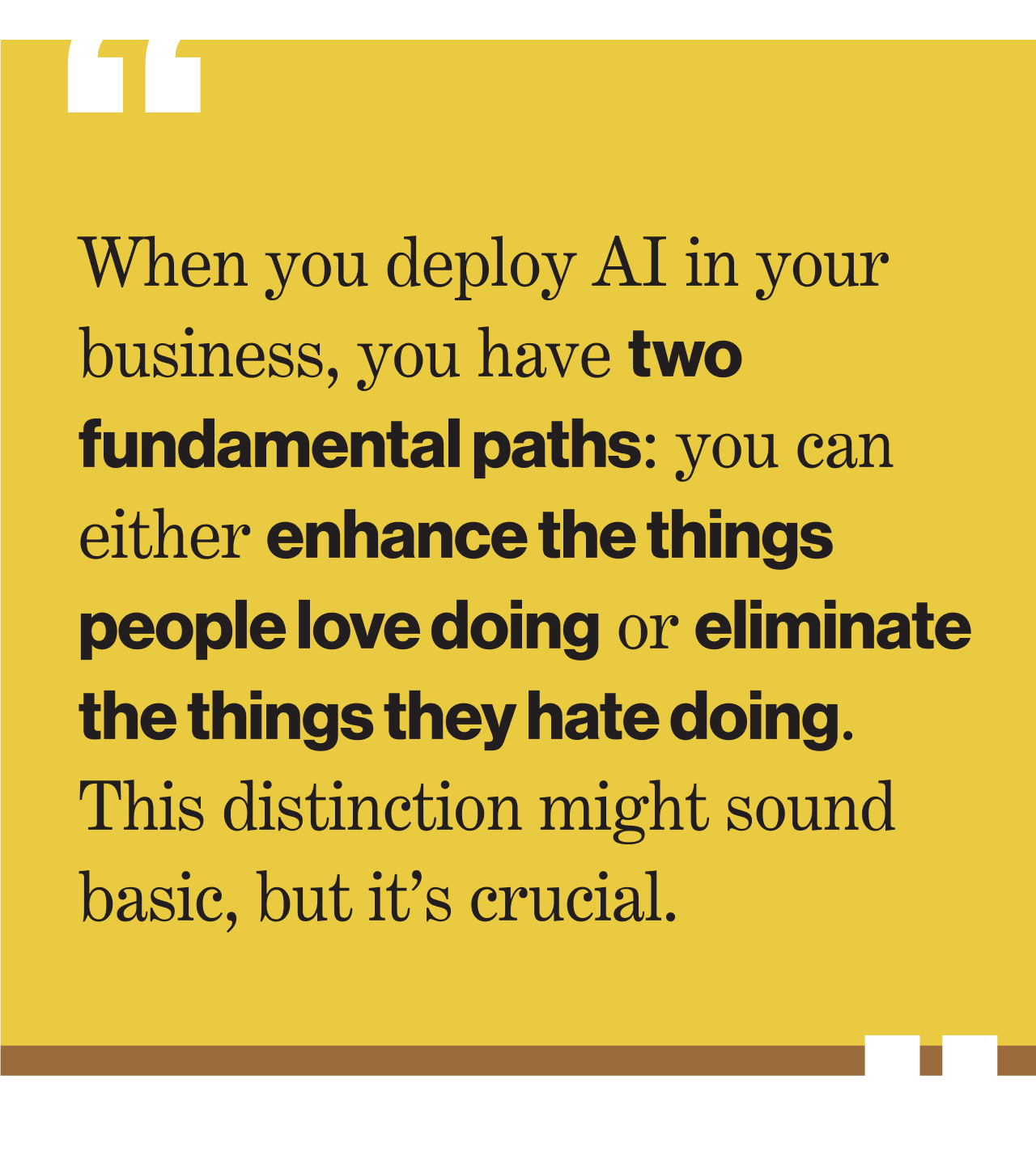

PERSPECTIVE

How to evaluate the effectiveness of AI investment

Do what you love and let AI do the rest

NEWSLETTER

Weekly Provocation: Media is disrupting AI, too

A dispatch from an NYC conference about AI and the media reveals a complicated relationship between the two. The media industry is more than interested; it is frustrated by the limitations of AI. Specifically, the loading time of AI summaries is a big problem.

PODCAST

Episode #002

Toby and Dan talk about their trip to Lisbon for Web Summit, legal protections for agents, and why the internet sucks (and how it could be better).

IN THE TRADES

Beware of Hype

What if that sudden surge of attention and sales is not the thing you were looking for all along? One of our founding members bursts the hype bubble in Design Week UK.

FEATURE RECAP

2nd Annual EOY Provocations Zoom

Predictions are boring. What if we prepared for 2025 with provocations instead? For the second year in a row, we asked our members to provoke the new year with a series of questions, statements, and feelings.

Twelve Provocations About 2025

Matt Chmiel

11•29•24

NEWSLETTER

AI Compliance will be codified by 2026. Are you ready for it?

Who is liable when an AI agent says the wrong thing? The platform that hosts it, the brand that uses it, the developer that programmed it, or the model that trained it? While the black box of AI makes for good legal discourse, you should pay attention if you are developing an agent.

RECAP

AI and the Law

Are agents protected under Section 230? What is the deal with the Colorado AI Act?

Matt Chmiel

11•15•24

This expert thinks the hottest thing in eCommerce is better site search.

Matt Chmiel

11•08•24

NEWSLETTER

The emotional web is a gold mine.

An emotional web can help us understand ourselves better, understand how we can change our habits, and do it without judgment and with empathy. What if we were able to do this without pharmacology? This is the opportunity that lies ahead.

PODCAST

Introducing the ON_Discourse Podcast

Our team preps for an upcoming summit about the future of the connected home. Can tech bring families together, or will smart toasters rule the home?

NEWSLETTER

Nothing can stop AI-generated media.

We are going from a mass-media content supply chain to a personalized one. Tomorrow, 90% of what we consume will be personalized, generated, and unique to each individual. You may not want it. You may not like it. You may think it will start a civil war. The one thing you won’t be able to do is stop it.

RECAP

Inevitable AI?

A room full of executives argues against an unstoppable AI invasion.

Matt Chmiel

10•10•24

FEATURE

Does AI Get Brands?

Can an LLM truly understand and then replicate the values that made good brands great?

James Cooper

FEATURE

Your Dumb House

When will all of our connected devices start making sense? A new era of connectivity is coming.

Overheard at ON_Discourse

MEMBER EXPERIENCE

October 10, 9 – 11am • Gemma NYC

We’re bringing together business leaders and AI experts to debate the question in the context of entertainment and marketing.

RECAP

SaaS is a Trick

You spend a lot of money to stay in what this founder called “data jail.” Can AI break you out of expensive SaaS contracts?

Matt Chmiel

10•03•24

NEWSLETTER

AI Is the Ultimate Manager

People are weird. They have feelings and those things are a mess. Despite this factory flaw, we have collectively decided that the best way to turn emotional beings into productive workers is to manage them… with other people. What does the future org-chart look like if AI replaces conventional management tasks?

How AI is integrating into the workplace. The good, the bad, and everything in between.

Matt Chmiel

09•27•24

RECAP

Holy F*ing Sh*t

A member tells us what it felt like to build a functional CRM prototype in three hours. And donkeycorns.

Matt Chmiel

09•19•24

FEATURE | INNOVATION IN AI

The Simulation Era

Design thinking is going to be replaced with AI-generated simulation technology. What does that look like? We wrote a multi-part series about it.

NEWSLETTER

Who Really Won the Olympics?

We covered this in a recent newsletter. No brands seemingly won the games, and gold medals didn’t have the same luster as memes.

MEMBER RESPONSE

Culture Won in Paris

Hip-hop and American culture dominated the games. They were the big winners.

Carlos Mare

09•10•24

MEMBER EXPERIENCE

September 10, 9 – 11am • The Standard NYC

The pace of change is accelerating, so where are the blind spots that make it dangerous to navigate?

MEMBER EXPERIENCE

Everything you can expect from an ON_Discourse event, only smaller. Every Thursday, 12–1pm EST.

SPECIAL REPORT

The future internet is being written, and it’s going to be weird, wild, and, most likely, even more batshit crazy than its predecessor. If you want to understand how to successfully ride the next wave, join us for a breakdown of everything we have learned and the incredible perspectives shared by over one hundred leading experts in tech and business.

LATEST FROM OUR NEWSLETTER

Weekly Provocation: Virality Defeated Marketing in Paris.

Snoop defeated the brands. His mystifying behavior generated its own gravitational force. NBC deserves credit for setting him free on its platform, where he commentated on badminton and dressage, danced at gymnastics, and randomly appeared throughout the event.


FROM A RECENT GROUP CHAT

What Are We Getting Wrong About AI?

Our members are bullish on the value of Gen AI.